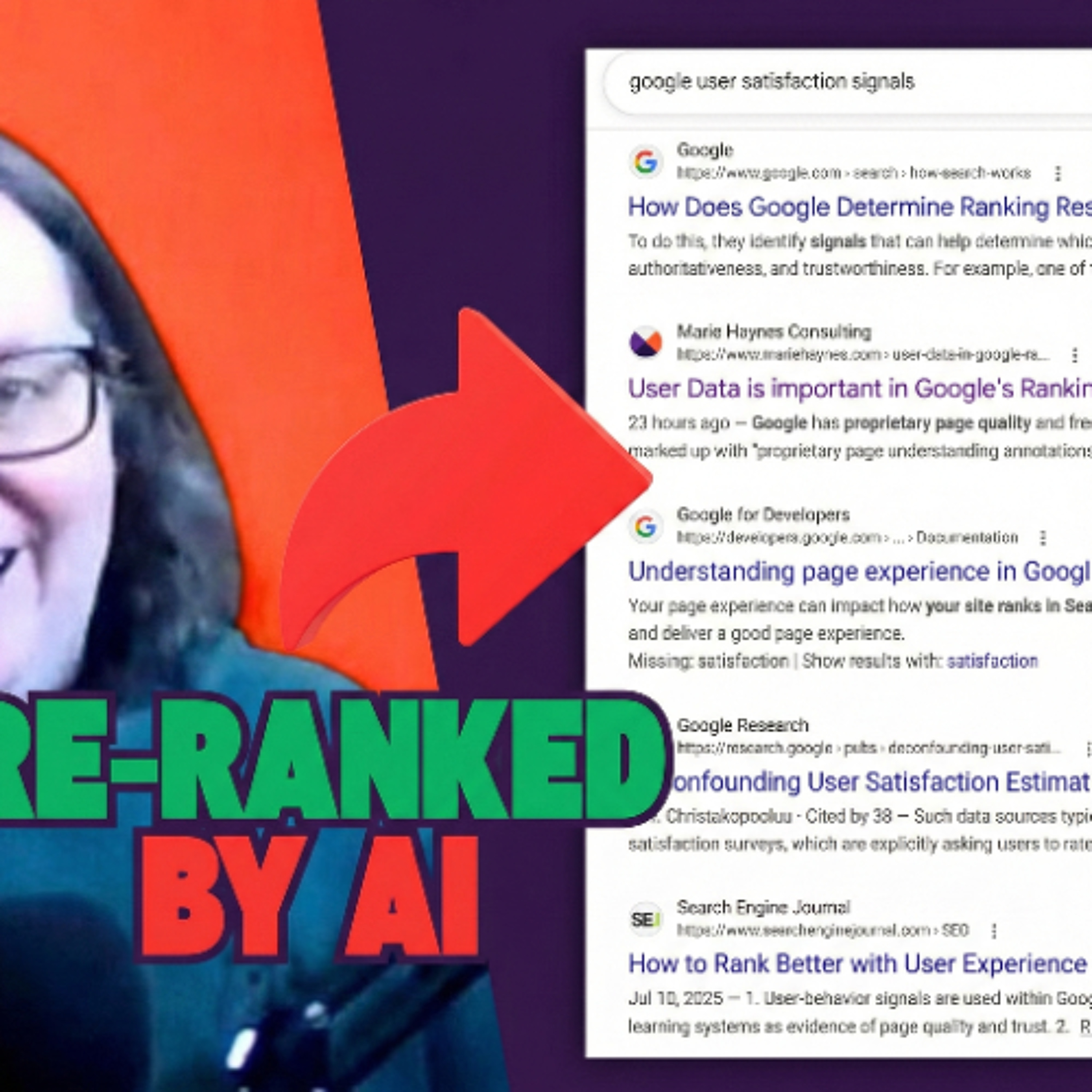

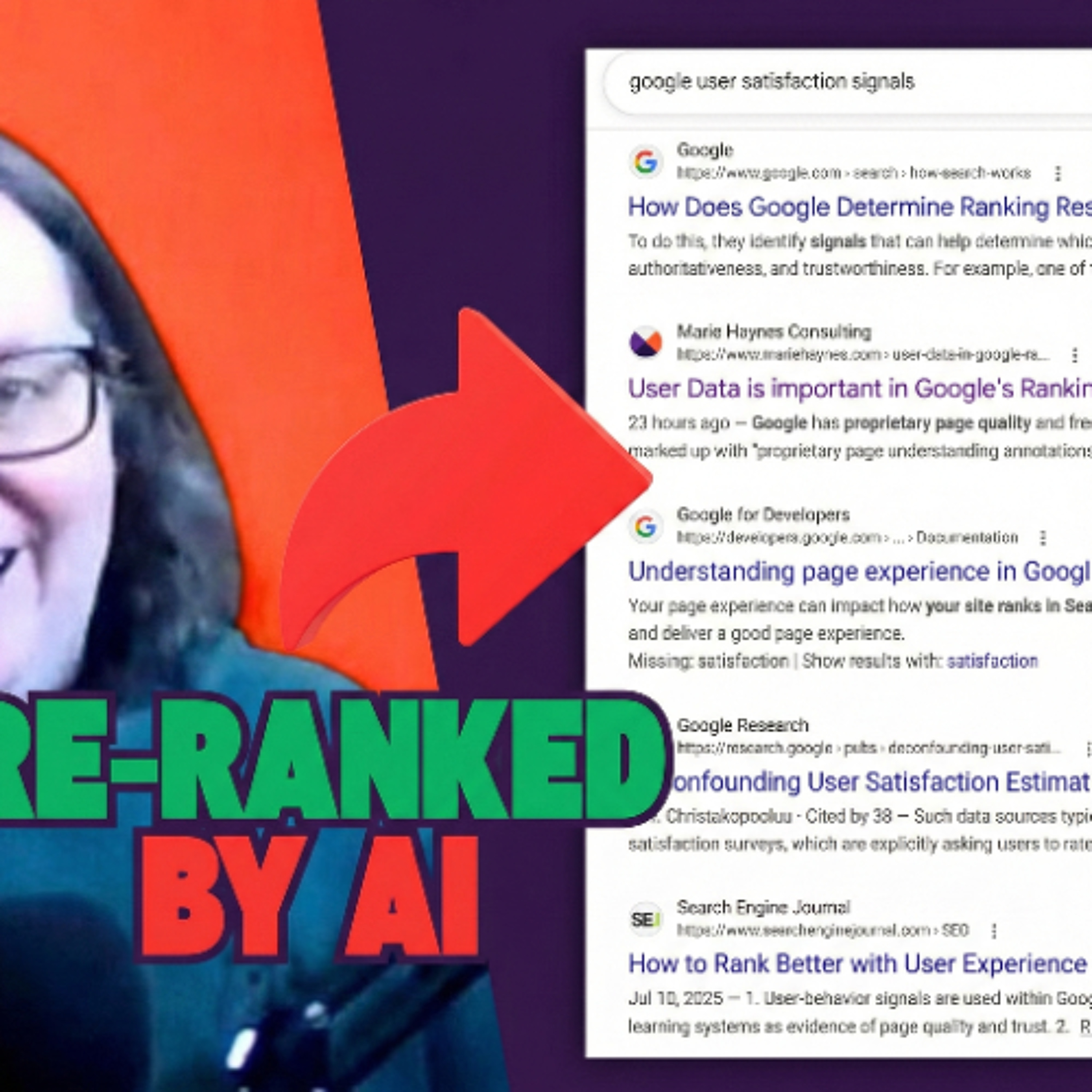

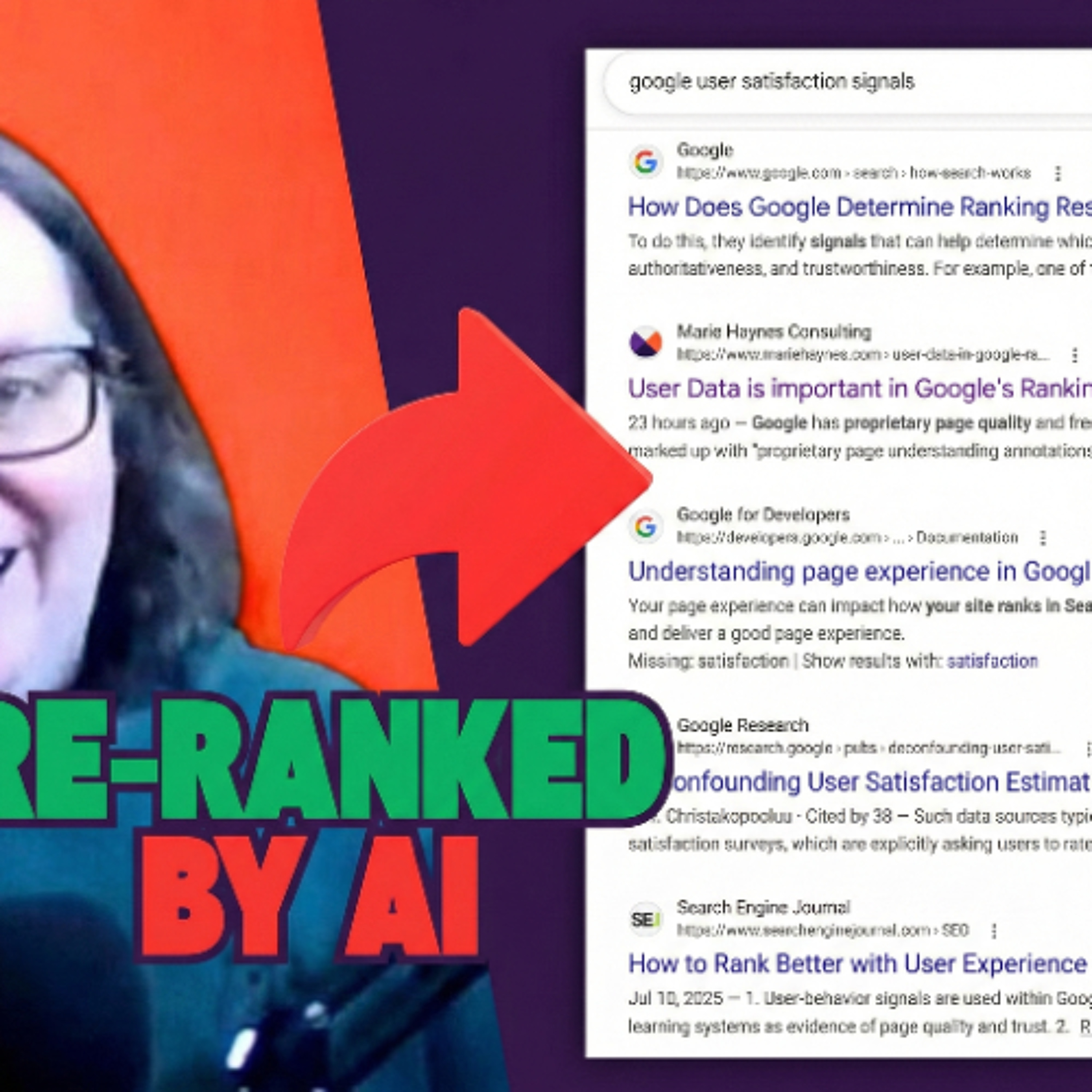

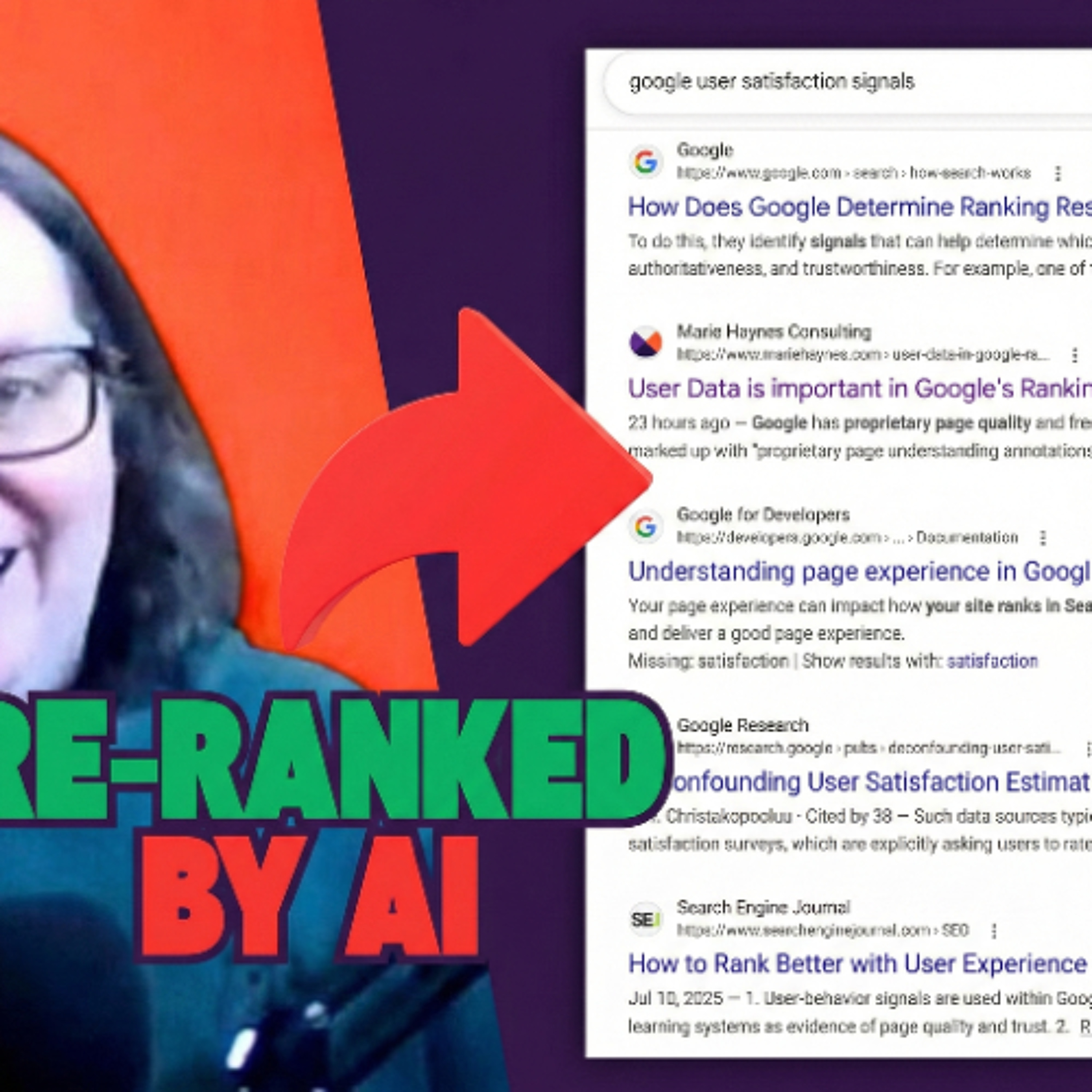

Did you know Google "re-ranks" search results using AI that is trained on user data and quality rater scores? In this video, I break down revelations from the DOJ trial, including the "Glue" system, RankEmbed BERT, and why user satisfaction is now the most critical factor for SEO.

00:00 - Intro: Why user satisfaction is the most important ranking factor.

00:49 - Google’s Search Quality Rater Guidelines & Helpful Content documentation.

01:36 - Marie’s Theory: Traditional algorithms vs. AI re-ranking systems.

02:35 - Why Google says "Don’t chunk your content" (Danny Sullivan).

03:00 - Vector Search & Embeddings: How AI retrieval actually works.

05:40 - The DOJ vs. Google Trial: Leaks on user-side data.

07:44 - The "Glue" System (NavBoost): How Google tracks clicks & hovers.

09:03 - "Instant Glue": How search adapts to new intent (Nice vs. nice).

10:20 - RankEmbed BERT: The deep learning model Google hid.

12:21 - How AI re-ranks the top search results.

13:03 - BERT Training: Wikipedia, BooksCorpus... and romance novels?

15:18 - The 3 Deep Learning Models: RankBrain, DeepRank, RankEmbed.

17:40 - RankBrain: Why it only re-ranks the top 20-30 results.

18:47 - The Helpful Content System: A machine learning classifier.

21:18 - March 2024 Core Update: The evolution of helpfulness signals.

23:00 - Frozen vs. Retrained Google: How algorithms are tested.

26:01 - Why Google runs 17,000+ live traffic experiments.

27:16 - Does Google use Chrome data for rankings? (DOJ evidence).

28:32 - The "AI Mountain": Why traffic to AI-generated content often crashes.

29:30 - How to optimize for user satisfaction (beyond keywords).

31:00 - Liz Reid on AI Overviews: Why users click for depth.