455 episodes

We explore the synergy of humanoid robots and LLM AI. This episode delves into how robots can learn and interpret their environment in human-like ways, based on a key video listed below. Whether or not you view the video, the discussion offers deep insights into AI's evolving role in human interaction. Transcript: https://otter.ai/u/VqiTSDMDLAKcaF1XuAH8ExhJnLE?utm_source=copy_url References: https://youtu.be/Sq1QZB5baNw?si=dAxLQIws3xkra_mf https://spectrum-ieee-org.cdn.ampproject.org/c/s/spectrum.ieee.org/amp/prompt-engineering-is-dead-2667410624 https://arxiv.org/abs/2402.17764v1 https://www.emergentmind.com/papers/2402.17764

We delve into spatial computing today and discuss Apple's Vision Pro face computer. Everyone in the Cloud2030 group is very interested in augmented reality and virtual reality, and the release of the Apple Vision Pro seems to meet many thresholds that make us surprisingly optimistic about its potential. We discuss aspects we like as well as what we thought was going to be a challenge. Whether you are already watching this space or are new to this concept of a spatial computer from Apple, you'll get a lot out of this conversation. Resources: https://www.barnesandnoble.com/w/rainbows-end-vernor-vinge https://www.tomsguide.com/reviews/rabbit-r1 Transcript: https://otter.ai/u/h61-B-2G9RdWT4gQuguLLDtkkx0?utm_source=copy_url

The compliance death curve is an evolving concept I’ve been working on that tries to explain how companies fight compliance, governance, and standardization efforts, which are critical to platform teams and infrastructure operations. Today we try to decompose some of the mathematics that I've been using into more universal, more easily understood components. We built a compliance flywheel that I found really fascinating; you can see an example of that work in our podcast description. It could also be helpful to check out my previously recorded compliance death curve talk, which has been released. Resources: https://www.youtube.com/watch?v=4RUKsakKZI0 Transcript: https://otter.ai/u/k9q5ZZ81Hm-EAAtfkVVtKNNqXwE?utm_source=copy_url

TechOps series episode 3 covers how to automate against APIs. We discuss the ways in which you can use APIs effectively and the ways you can run into trouble. We also discuss how we should be consuming APIs, both as consumers and as producers of our own APIs. Many ideas discussed were pulled from learning how people consume our APIs and what we can do to help make them better and safer. Enjoy this broader TechOps series, where we dive deep into tips and techniques that improve your journey as an automator. Transcript: https://otter.ai/u/5akxcG83FBS1m9PBUnB4rjLzWac?utm_source=copy_url Image by Dall-E

For our quarterly book club meeting, we picked Never Split the Difference by Chris Voss. This book is about negotiation, which we found fascinating because we are all entrepreneurial in some way and handle sales. Next quarter, we'll talk about a related book that we brought up, The Two But Rule. Transcript: https://otter.ai/u/g1zFW444R5NseB8rsVe4StMYWEU?utm_source=copy_url

How can we understand agility and adaptability? In this discussion, we get very concrete about the differences between agility and adaptability and why they matter as you go on your own innovation journey. This includes looking for places where standards can be applied to accelerate your team, and places where it's too early and the learning iterations we would call agile processes are more appropriate. We also discuss how teams get caught in the middle between standardization and agility. Transcript: https://otter.ai/u/vsWqEiJpssWnyqlOCm4G-IuDi80?utm_source=copy_url Image by DALL-E

We dive into data operations in today’s episode! We cover the idea that with all of the work we're doing in AI, ML, and data analytics, you actually have to steward your data. We also cover process controls, like those we have with DevOps in infrastructure, applying similar concepts (governance, controls, automation) to how your data flows through your system. Transcript: https://otter.ai/u/pesotDnHCCD5lyPVx795EZIhTlA?utm_source=copy_url Image by DALL-E

We dive into the chaos created by Broadcom’s acquisition of VMware. In this episode, we discuss what Broadcom is doing, why it's a problem, how enterprises are reacting, and what alternatives are on the market. We cover the whole mess in all its glory, and even provide some love for Broadcom. Resources: https://www.thestack.technology/vmware-is-killing-off-56-products-including-vsphere-hypervisor-and-nsx/ https://www.siderolabs.com/platform/saas-for-kubernetes/ Transcript: https://otter.ai/u/SO8PD-p8AHwwsKfGsNqolSoYTlk?utm_source=copy_url Image by DALL-E

Join us as we embark on a comprehensive journey to master intermediate and advanced skills crucial for operators, DevOps, and platform engineers. From scripting and service setup to running complex systems, we address the critical gap in training for building, automating, and maintaining resilient and robust systems. Over the coming months, the Cloud 2030 crew will delve into the core skill sets required in this rapidly evolving field. Our series will cover an array of topics, including tools, processes, and methodologies essential for excelling in tech operations. We plan to explore a variety of subjects, aiming to equip you with the knowledge to automate effectively and build resilient systems. This kickoff meeting sets the stage for a year-long exploration into the depths of tech operations, inviting you to contribute your expertise and curiosity. Prepare for an enlightening journey as we lay out our comprehensive Tech Ops agenda. Agenda: https://docs.google.com/document/d/1Yvr8loVNfkxKmaQN5XWEaskzrV9-OsJ4oeKnUcnQ90s/edit?usp=sharing Transcript: https://otter.ai/u/FP_1Ose_jJ7qLezuAVZjFovSyVE?utm_source=copy_url Photo by Bence Szemerey: https://www.pexels.com/photo/brown-wooden-frame-with-brown-metal-pipe-6804254/

Departing from our typical podcast format, today’s episode is part of a presentation that I've been preparing that compares 125-year-old house building architecture to modern DevOps. We also analyze things that work and don't work. There are a lot of home maintenance stories and comparison notes. In the back half of the episode in particular, we get into how this type of challenge relates to Operations Management. References: https://nationalpost.com/news/canada/after-the-second-world-war-canada-thought-it-would-be-a-good-idea-to-install-cardboard-sewer-pipes Transcript: https://otter.ai/u/jf8at50nf0KKQG7DrlQAAbCdqH4?utm_source=copy_url Image by DALLE: Victorian house with the second floor redesigned in a modern style, featuring extensive use of glass. Each image also includes the porch with rockers and a poodle.

After a brief hiatus, the Cloud2030 group is back and deep in tech, talking about what we think is coming on the tech front, sans AI. In this episode, we take some time to go through Kubernetes, hardware, software, bill of materials, and some governance. This includes a smattering of predictions to get your year started off with a bang. From there, we are going to be moving into our tech-ops series. Find more details about that in today’s outro! Resources: https://www.theregister.com/2023/12/27/bruce_perens_post_open https://developersalliance.org/open-source-liability-is-coming/ Transcript: https://otter.ai/u/UQyqHKJ9oNd1SquAWWNqGp6oAcE?utm_source=copy_url Image by DALLE: cartoon images of a robot reviewing a long bill of materials on a scroll of paper.

How can companies, enterprises and individuals become more innovative? We investigate the idea of innovation and disruption and continue past where we were with the three horizons model in our previous discussions. Today’s podcast focuses on breaking this apart into adapting an agile disruption, the use of standardization and the cognitive dissonance of innovation, even digging down into distinguishing between inventions and applications. Transcript: https://otter.ai/u/XCidE-0xG7zNmIC7FKEwRrJsPi4?utm_source=copy_url DALL-E Prompt: a factor of rubber chickens getting stretching and upgraded into cybertoys

Let's celebrate the work that we've done as a community in the Cloud2030 group this year, and talk about some really exciting things we have planned for 2024. It's remarkable to look back on how this podcast has evolved from a meeting place during COVID, where we could have the hallway tracks we had been missing, into something that discusses technology at the forefront: not just of tech, but of the human and business implications of that technology. Transcript: https://otter.ai/u/d4Rq_a_tdyihTp5EyzOsnRbQ8jg?utm_source=copy_url Image by DALL-E

This is our annual year-in-review and prediction episode, and it is a doozy. We talk through what has been an incredibly busy year in open source, cloud repatriation, AI, ML, and ChatGPT. We lay down some really interesting insights and then look forward, not just into 2024 but across two years of predictions and trends that we see happening. We cover what we think will be shaping and shaking the market. References: https://basecamp.com/cloud-exit https://world.hey.com/dhh/the-big-cloud-exit-faq-20274010 Transcript: https://otter.ai/u/13Wdve1WQVCPjXtsf8QnJtg6r0M?utm_source=copy_url DALL-E Prompt: futeristic graphic of a hall of mirrors with 2023 turning into 2024 and the cast of the wizard of oz

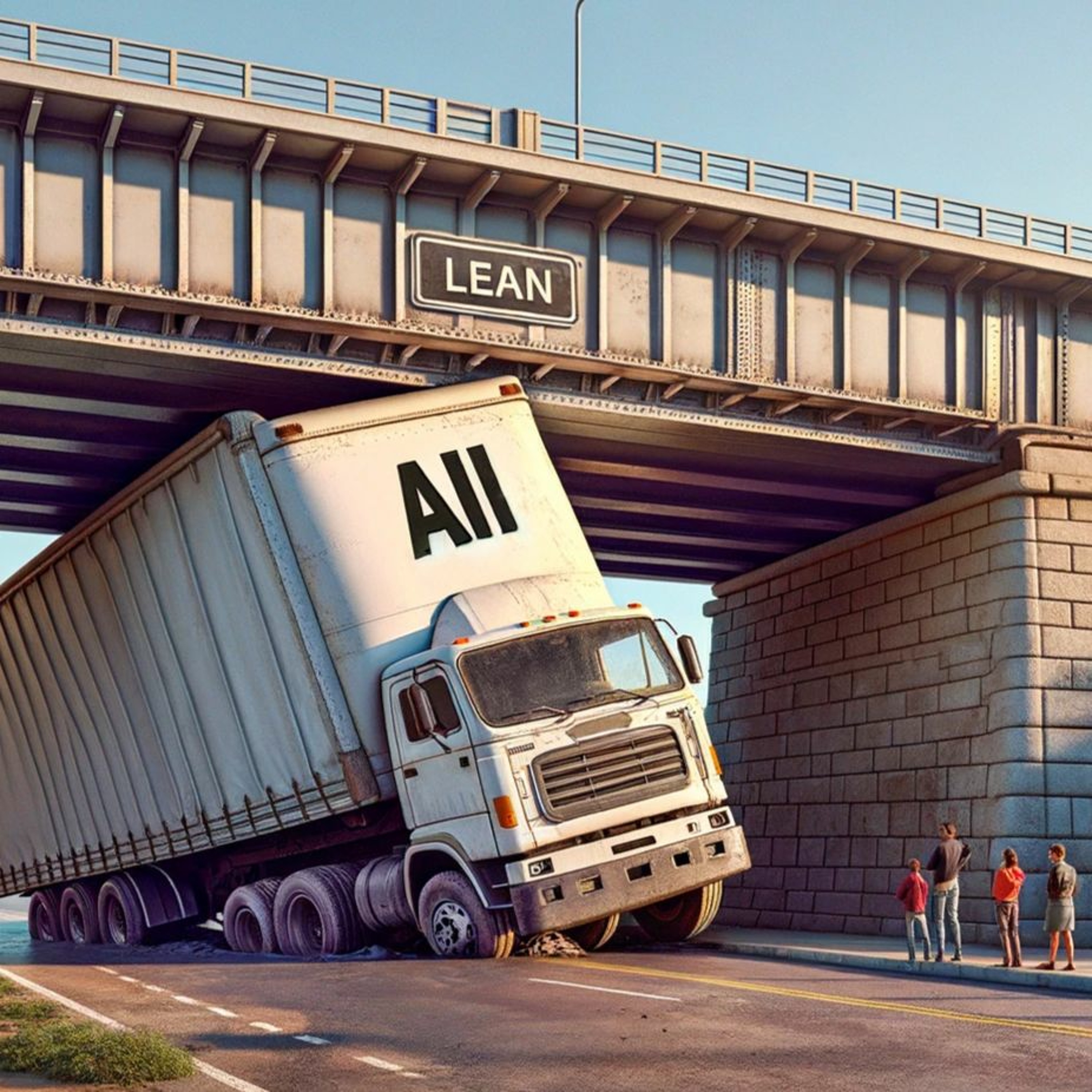

How do we apply the principles of lean to data science and data engineering? We broaden the discussion to the use of AI and machine learning more generally. This is a topic that we had discussed over the summer and wanted to come back to six months later because so much has changed in the industry. What does agile, lean process control look like in an infrastructure automation platform? How can we make these very difficult and challenging components of data and data management more agile and more lean? I think you will get a lot out of this conversation considering our current hypercharged AI, ML, and LLM environment. Transcript: https://otter.ai/u/1ZuALgSXcPw-bIf2GOumDFZH0UU?utm_source=copy_url DALL-E Prompt: please create a picture of a very large truck stuck under a low bridge. please label the truck as ai and the bridge as lean

If you haven’t had a chance to join in on our book groups, I strongly recommend you take a look at the upcoming books we are reading! Today we discussed Data Science in Context, a relatively academic book by a group of PhDs: Spector, Norvig, Wiggins, and Wing. The book gets into some really fascinating analysis techniques, addressing both the practical and ethical implications of data science applications. We discuss the biases inherent in the book and the things that are missing and potentially disruptive to its core assumptions. So even if you haven't read this book, I think you will find the discussion fascinating. This week I'm keeping our warm-up discussion about OpenAI in the podcast, so you will get about 10 minutes of closely related bonus content before the book group discussion. Our conversations about what has been going on with OpenAI, their board, and Q* are directly related to the concluding ideas in our discussion about Data Science in Context. Transcript: https://otter.ai/u/qYBKNhDBKqaghEaxE-I-mz6xDvg?utm_source=copy_url Image: Data Science In Context cover

How can digital identity be used to build better trust and systems in our daily transactions? There are really significant challenges and consequences to having a national guaranteed identity - a single identity provider. Knowing who you're interacting with, in every form and in every situation, is not as simple as you might think. There are many analogues to physical identity that are worth considering. What would it mean for us to not have privacy? Does identity mean we don't have privacy in our interactions? Who can we trust and what authority do they have? Transcript: https://otter.ai/u/o_43fyGjxu24Ur5rpzzy_D6aZCI?utm_source=copy_url Image by Dall-e prompt: a cartoon like image of a humanoid robot looking into a mirror and seeing a masked pirate version of itself

What incidental, or accidental, surveillance state is being created by all of the video and listening devices that are now embedded in our world? Today we talk through the ramifications of those networks being in private hands in which companies can actually review, analyze and monetize data from these systems. For example - autonomous vehicle cameras and delivery van cameras. This episode discusses the ramifications of this example and more. References: https://arstechnica.com/tech-policy/2023/11/five-big-carmakers-beat-lawsuits-alleging-infotainment-systems-invade-privacy/ https://www.vzbv.de/en/court-prohibits-linkedins-data-privacy-infringements https://last-chance-for-eidas.org/ Transcript: https://otter.ai/u/NszGAX95R70ydlW_fJcbDKAXwTM?utm_source=copy_url Image by Dall-e prompt: "1950s era cartoon of an autonomous car with a lot of cameras spying on people"

What is innovation? Today we continue this discussion, specifically drilling into the three horizons model for creating growth and value. We spend a lot of time talking about how companies innovate using that model, what it means, and what examples of it look like. How does that spark take place? We take you further along the innovation learning process in this meeting. Transcript: https://otter.ai/u/He9h2NVxazKMDN9a13TcurXsqXU?utm_source=copy_url Image by Dall-E prompt “please create a close up picture of a flock of birds navigating between three different horizons. the birds are smart and know which why they need to go”

We dive deep into the technical subject of governance and policy enforcement, including the tools, techniques, and processes that you need to be aware of to do it well. We cover how to get started, what to think about, what to be aware of, and how to chip away at your governance and policy challenges, including developer portals, infrastructure pipelines, and DevSecOps. Transcript: https://otter.ai/u/ND90jKHwbklUBOAwT1XkgEo2pbs?utm_source=copy_url Image by Dall-E prompt “please make a carton that shows a regulator who is managing cloud and IT assets using impractical tools”

We dive into the dynamics of open source projects and monetization today, starting with the Terraform and OpenTofu split. That topic is one that we love to chew over and potentially overanalyze, but today’s discussion is different. We go into how ecosystems are built in open, proprietary, and cloud systems, and take a historical perspective on what makes a project successful from an ecosystem perspective. We also dive into why some projects work like that and why some don't. Today’s episode gives a new take on some of the dynamics going on in the open source communities through the lens of what happened with OpenTofu and Terraform. Transcript: https://otter.ai/u/ONDvgS9yGMrSN-bXMTy9KMC-XPs?utm_source=copy_url Photo by James Wheeler: https://www.pexels.com/photo/lake-pebbles-under-body-of-water-1574181/

We discuss innovation, a favorite topic of ours, today. Instead of diving in for a structured conversation, we dove at the bait that was offered by Marc Andreessen in his techno optimist manifesto. If you haven't read it, I would suggest taking a moment to read it before you listen to the rest of the podcast, but you do not have to! It is definitely an interesting opinion piece about the power of innovation, which is why it was a good input for our discussion. We have our own unique perspective and a robust discussion about how innovation should work that tees up further conversations about the three horizons model for innovation. References: https://a16z.com/the-techno-optimist-manifesto/ Transcript: https://otter.ai/u/6qOpnFW0LMvh-rvZfwko93SC5CY?utm_source=copy_url Photo by RDNE Stock project: https://www.pexels.com/photo/woman-in-black-jacket-sitting-beside-woman-in-gray-sweater-7413891/

What does it take to implement governance and compliance, given that they are process controls much more than individual technologies? Today we note that a lot of the talks seem to be about governance and compliance, and we have a fascinating discussion about governance, compliance, and Kubernetes. The idea is that Kubernetes is maturing, losing the drama that was a hallmark of its first decade, and moving toward a focus on control, security, compliance, and normality. Yet all of those things are in some tension with vendors and users, which puts single-choice compliance and governance in direct conflict with competitive open source ecosystems. This makes for a fascinating conversation where we touch on some really important issues for the industry. Transcript: https://otter.ai/u/mAkvsYgMYMp_W8BizkoSxliYgrg?utm_source=copy_url Image: Generated by Dall-E

How do we limit and regulate LLMs and AI? We approach this from multiple angles and look at what it's like to regulate this type of technology. If you're interested in the limits of any technology, and specifically in how AI gets regulated and where we're likely to impose legislative barriers or restrictions, then this will be a fascinating podcast for you. Transcript: https://otter.ai/u/8IsFB-H-U3XzpQ751l2fs_c3-vE?utm_source=copy_url Photo by Pixabay: https://www.pexels.com/photo/black-android-smartphone-on-top-of-white-book-39584/

What goes on behind the scenes with AI, and specifically with data center infrastructure and hardware? We discuss wide-ranging concerns, opportunities, and market blockers around AI. We also address how deeply it can impact innovation, companies, privacy, and legislation, viewed through the frame of hardware and automation. Today’s discussion leads us to a larger question of what unlocks innovation in general, which we will address in future podcasts. Links: https://research.aimultiple.com/wp-content/webp-express/webp-images/uploads/2023/10/Total-funding-for-ai-chip-makers.png.webp Transcript: https://otter.ai/u/3FUaZ3m8JabYLyJZGHZnpjgd81Y?utm_source=copy_url Photo by Tim Samuel: https://www.pexels.com/photo/woman-handful-of-unhealthy-chips-6697286/

If you follow cloud2030 discussions or any of my podcasting over the last decade, Edge is a very interesting topic to me. Today’s episode is a short update on the state of the edge from a very specific position. In this discussion, I walk through with Josh why edge has been hard for us to nail down from a technology perspective. This is something of special interest to RackN as we keep honing and refining our IT edge infrastructure technology set. Transcript: https://otter.ai/u/OtzOtPvoyiAKZdxJjmE4q9HpSfo?utm_source=copy_url Photo by Khoa Võ: https://www.pexels.com/photo/unrecognizable-man-sitting-on-rooftop-edge-against-cloudy-sundown-sky-5780744/

The Terraform fork, now known as the OpenTofu project, is our first topic in today’s episode. We discuss what's going on with that, the challenges, and the potential pressures from HashiCorp that created this whole situation. How do we get experts to recover their authority, and how do we look at organizations like that? We have about 20 minutes of really involved conversation about the book Death of Expertise by Tom Nichols, continuing from the previous podcast. If you haven't heard the first part of the conversation, I suggest you go back and listen to our full Death of Expertise podcast. We cover two topics, one short term and one long term, so it’s a nice, balanced industry discussion around what the fork means, what its impacts are, and a little bit of recap. There are some really spicy opinions. If you want to jump forward, we resume our discussion about Death of Expertise around 32 minutes in. Transcript: https://otter.ai/u/zGUYDP6DynzxPBNLM9dcePneb7Q?utm_source=copy_url Photo by lil artsy: https://www.pexels.com/photo/person-about-to-catch-four-dices-1111597/

What is the potential for biasing LLMs? We dive into biases in both good ways and bad ways. Is the expertise that we're feeding into these models insufficient to drive the outcomes that we're looking for? Or are we going to be eliminating humans from the loop in a relatively short period of time? Both outcomes, at the moment, feel equally probable, which is troubling. We dive into how and why that happens, what's going on, and some concrete tips for how you can improve your prompting to avoid these same pitfalls. Transcript: https://otter.ai/u/v3MaWiCWEe-G1ar2O0KpriJf9vU?utm_source=copy_url Photo by Marta Nogueira: https://www.pexels.com/photo/pink-and-blue-background-divided-diagonally-with-two-matching-colored-pencils-placed-on-opposite-colors-top-down-view-flat-lay-with-empty-space-for-text-17151677/

We continue our book group series today with The Death of Expertise by Tom Nichols, which is very dense with provocative and thought-provoking comments, topics, and ideas. It was so interesting that we decided we needed two sessions to fully unpack it. This is part one, which is about how society handles expertise, how social media changes that, the cyclical nature of confidence in our institutions, and how expertise shapes technology buying and usage patterns. If you’re interested, please participate in part two of the discussion! We also talked about the Dunning-Kruger effect, the idea that the less you know about something, the more confident you are, and that gaining knowledge makes you less falsely confident in how you present yourself. It’s a more complex topic than that very short summary. “It is difficult to get a man to understand something, when his salary depends on his not understanding it.” ― Upton Sinclair, I, Candidate for Governor Transcript: https://otter.ai/u/m--7wT4fRjdodT3qRuXs6N8VB4E?utm_source=copy_url Image is book cover

Can large language models effectively supplant developers and DevOps engineers? Today we go deeper into how the models can be trained, whether they can be trusted, and what the upside or positive use case is in which we really turn LLMs into the type of weighing person experts that they have the potential to be, versus simply something that turns up the volume on how fast you generate code. We also talk about the downsides of that type of model and about how powerful these tools could become as assistants, a key way to transform and improve work outcomes. Transcript: https://otter.ai/u/u3bArfIvx40oUXnRLtSXwAx_Iuw?utm_source=copy_url Photo by Pixabay: https://www.pexels.com/photo/aeroplane-aircraft-airplane-aviation-33224/